A Fusion of LLM and RL

This post was automatically translated by an LLM. The original is in Chinese. If you find any translation errors, please leave a comment to help me improve the translation. Thanks!

Recently, Tsinghua University and SenseTime published a paper titled "Ghost in the Minecraft: Generally Capable Agents for Open-World Environments via Large Language Models with Text-based Knowledge and Memory," GITM for short. It is quite interesting, and interested readers may want to read the original paper.

In the paper, the authors use a large language model to guide the agent's behavior, with language serving as the medium through which the agent interacts with its world. After only two days of training on a single 32-core CPU, the agent achieved very strong results in Minecraft, and along the way it unlocked the entire Minecraft tech tree.

Background

First, some background on the problem GITM addresses: starting from a freshly initialized Minecraft world, the agent must keep learning until it can mine diamonds. In Minecraft, diamonds are usually buried deep underground, and mining diamond ore requires at least an iron pickaxe. Crafting an iron pickaxe requires iron ingots, which are obtained by mining iron ore and smelting it in a furnace. Mining iron ore requires a stone pickaxe; crafting a stone pickaxe requires cobblestone, and cobblestone can only be mined with a wooden pickaxe, which is crafted from wood at a crafting table.

And this is only the tool branch of the tech tree. In Minecraft the agent must also keep eating to recover health and stave off hunger, and craft weapons and armor to protect itself, all of which place heavy demands on the agent's range of abilities.

In reinforcement learning, the agent observes the environment and chooses an action based on that observation; the environment changes in response to the action and returns a reward signal to the agent. Because Minecraft offers so much freedom and such a deep tech tree, however, reinforcement learning has long performed poorly in it.
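To make the loop concrete, here is a minimal Python sketch of the observe, act, reward cycle. `CorridorEnv` and `run_episode` are hypothetical names invented for illustration; this is a toy one-dimensional corridor, not Minecraft and not any real RL library. The reward is sparse: the agent receives 1.0 only on reaching the goal and 0.0 on every other step.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StepResult:
    observation: int
    reward: float
    done: bool


class CorridorEnv:
    """Hypothetical toy environment: a 1-D corridor with the goal at `goal`."""

    def __init__(self, goal: int = 5, max_steps: int = 50):
        self.goal = goal
        self.max_steps = max_steps
        self.pos = 0
        self.steps = 0

    def reset(self) -> int:
        self.pos, self.steps = 0, 0
        return self.pos

    def step(self, action: int) -> StepResult:
        # action is -1 (left) or +1 (right); the corridor cannot go below 0
        self.pos = max(0, self.pos + action)
        self.steps += 1
        reached = self.pos == self.goal
        # Sparse reward: a signal arrives only when the goal is reached
        reward = 1.0 if reached else 0.0
        done = reached or self.steps >= self.max_steps
        return StepResult(self.pos, reward, done)


def run_episode(env: CorridorEnv, policy: Callable[[int], int]) -> float:
    """Observe -> act -> environment changes -> collect reward, until done."""
    obs = env.reset()
    total, done = 0.0, False
    while not done:
        action = policy(obs)          # agent acts on its observation
        result = env.step(action)     # environment responds
        total += result.reward        # accumulate the reward signal
        obs, done = result.observation, result.done
    return total


print(run_episode(CorridorEnv(), lambda obs: 1))   # 1.0: always moves right
print(run_episode(CorridorEnv(), lambda obs: -1))  # 0.0: never leaves the start
```

Even in this toy, a policy that never stumbles onto the goal gets no learning signal at all. In Minecraft the diamond sits at the end of a long chain of prerequisites, which is one intuition for why reward-driven exploration struggles there.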

GITM

Rather than relying on reinforcement learning's reward-driven action selection, GITM uses a large language model to decompose and plan tasks, and finally interacts with the environment through an interface. Concretely, GITM consists of three parts: the LLM Decomposer, the LLM Planner, and the LLM Interface. Let's look at each of them in turn.

LLM Decomposer

The LLM Decomposer breaks a goal down into subgoals. First, what is a goal? In Minecraft, each goal can be defined as a five-tuple: \[ (Object, Count, Material, Tool, Info) \] where \(Object\) is the target item, \(Count\) is the required quantity, \(Material\) and \(Tool\) are the prerequisites for obtaining the item, and \(Info\) is text knowledge about the target item. Given a goal, the LLM Decomposer splits it along its prerequisites, generating subgoals whose \(Object\) is each required \(Material\) and \(Tool\). This decomposition is applied recursively until the generated subgoals have no prerequisites.
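The recursion can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the `KNOWLEDGE` table is a hypothetical stand-in for what the LLM supplies, its recipes are simplified (smelting fuel and recipe yields are ignored), and `decompose` flattens the recursion into a prerequisite-first list, which is a choice made here rather than something the paper specifies.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    """The five-tuple (Object, Count, Material, Tool, Info)."""
    object: str
    count: int
    material: dict = field(default_factory=dict)  # item -> quantity consumed
    tool: list = field(default_factory=list)      # items needed but not consumed
    info: str = ""


# Hypothetical stand-in for the LLM's knowledge (simplified recipes).
KNOWLEDGE = {
    g.object: g
    for g in [
        Goal("log", 1, info="chop a tree"),
        Goal("planks", 4, {"log": 1}, info="craft from a log"),
        Goal("stick", 4, {"planks": 2}, info="craft from planks"),
        Goal("crafting_table", 1, {"planks": 4}),
        Goal("wooden_pickaxe", 1, {"planks": 3, "stick": 2}, ["crafting_table"]),
        Goal("cobblestone", 1, tool=["wooden_pickaxe"], info="mine stone"),
        Goal("stone_pickaxe", 1, {"cobblestone": 3, "stick": 2}, ["crafting_table"]),
        Goal("iron_ore", 1, tool=["stone_pickaxe"], info="found underground"),
        Goal("furnace", 1, {"cobblestone": 8}, ["crafting_table"]),
        Goal("iron_ingot", 1, {"iron_ore": 1}, ["furnace"], info="smelt iron ore"),
        Goal("iron_pickaxe", 1, {"iron_ingot": 3, "stick": 2}, ["crafting_table"]),
        Goal("diamond", 1, tool=["iron_pickaxe"], info="buried deep underground"),
    ]
}


def decompose(obj, seen=None, order=None):
    """Recursively turn each Material and Tool into a subgoal until an item
    has no prerequisites; returns items in prerequisite-first order."""
    if seen is None:
        seen, order = set(), []
    if obj in seen:          # already planned (e.g. sticks are needed twice)
        return order
    seen.add(obj)
    goal = KNOWLEDGE[obj]
    for prereq in list(goal.material) + goal.tool:
        decompose(prereq, seen, order)
    order.append(obj)        # all prerequisites come before the item itself
    return order


print(decompose("diamond"))
# ['log', 'planks', 'stick', 'crafting_table', 'wooden_pickaxe', 'cobblestone',
#  'stone_pickaxe', 'iron_ore', 'furnace', 'iron_ingot', 'iron_pickaxe', 'diamond']
```

The output retraces the tech tree described in the Background section, with every leaf (here only `log`) having no prerequisites.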

This step can be implemented directly with a large language model, and the paper provides the exact prompts. Interested readers can try them with ChatGPT; the results are quite good.

LLM Planner

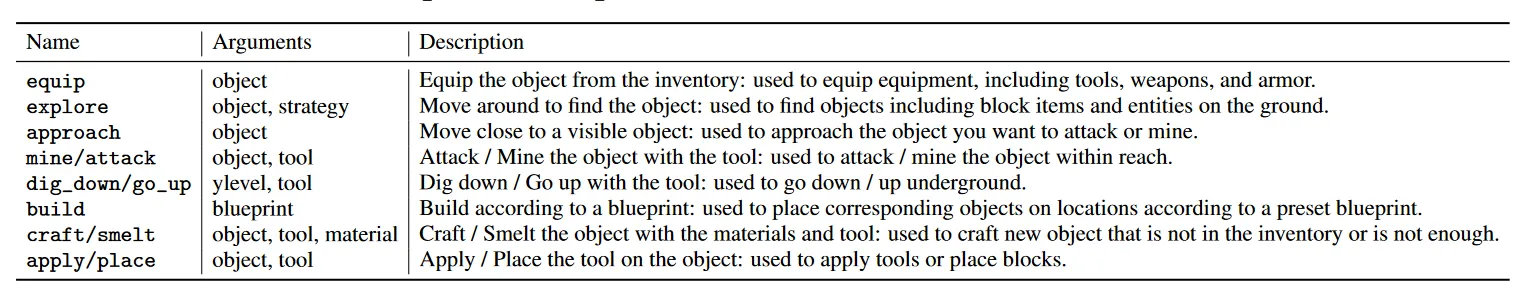

The LLM Planner breaks a given subgoal down into a sequence of structured actions. These are basic actions defined for Minecraft that can easily be implemented with scripts. Each action consists of three parts: \[ (Name, Arguments, Description) \] The list of structured actions is shown in the table above.
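As an illustration of the \((Name, Arguments, Description)\) format, the sketch below parses a planner's output into structured actions and rejects malformed ones. The JSON output format, the validation step, and the action names in `ACTION_SPECS` are all hypothetical assumptions made for this example; they are not the paper's actual action list or prompt format.

```python
import json
from dataclasses import dataclass


@dataclass
class StructuredAction:
    name: str
    arguments: dict
    description: str


# Hypothetical action vocabulary: name -> required argument keys.
ACTION_SPECS = {
    "explore": {"object", "strategy"},
    "mine": {"object", "tool"},
    "craft": {"object", "materials", "platform"},
}


def parse_plan(llm_output: str) -> list:
    """Parse a JSON list emitted by the planner and validate each action."""
    actions = []
    for item in json.loads(llm_output):
        name, args = item["name"], item.get("args", {})
        if name not in ACTION_SPECS:
            raise ValueError(f"unknown action: {name}")
        missing = ACTION_SPECS[name] - set(args)
        if missing:
            raise ValueError(f"{name} is missing arguments: {sorted(missing)}")
        actions.append(StructuredAction(name, args, item.get("description", "")))
    return actions


plan = parse_plan("""[
  {"name": "explore", "args": {"object": "tree", "strategy": "surface"},
   "description": "look for a tree"},
  {"name": "mine", "args": {"object": "log", "tool": null},
   "description": "chop logs by hand"}
]""")
print([a.name for a in plan])  # ['explore', 'mine']
```

Validating against a fixed vocabulary means a malformed LLM response fails early with a readable message instead of reaching the execution layer.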

LLM Interface

The LLM Interface converts the text-based actions produced by the Planner into operations that act on the environment. There are two main ways to do this: hand-written scripts or models trained with RL. Because the structured actions in Minecraft are well defined, GITM uses hand-written scripts to execute them.
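A minimal Python sketch of the script-based approach follows: a dispatch table routes each action name to a hand-written function, and every call returns feedback that a planner could use to re-plan. The inventory, `script_mine`, `script_craft`, and `Feedback` are hypothetical; a real interface would issue keyboard and mouse operations through the game's control API, and real crafting yields differ from the one-item increments used here.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    success: bool
    message: str


# Hypothetical world state; a real script would query the game instead.
inventory = {"log": 0, "planks": 0}


def script_mine(obj: str, tool=None) -> Feedback:
    # `tool` is accepted but ignored in this toy
    inventory[obj] = inventory.get(obj, 0) + 1
    return Feedback(True, f"mined 1 {obj}")


def script_craft(obj: str, materials: dict, platform=None) -> Feedback:
    # `platform` is accepted but ignored in this toy
    lacking = {m: n for m, n in materials.items() if inventory.get(m, 0) < n}
    if lacking:
        return Feedback(False, f"cannot craft {obj}, lacking {lacking}")
    for m, n in materials.items():
        inventory[m] -= n
    inventory[obj] = inventory.get(obj, 0) + 1  # one item, for simplicity
    return Feedback(True, f"crafted 1 {obj}")


SCRIPTS = {"mine": script_mine, "craft": script_craft}


def execute(name: str, arguments: dict) -> Feedback:
    """Route a text-level structured action to its hand-written script."""
    script = SCRIPTS.get(name)
    if script is None:
        return Feedback(False, f"no script for action {name!r}")
    return script(**arguments)


print(execute("craft", {"obj": "planks", "materials": {"log": 1}}).success)  # False
print(execute("mine", {"obj": "log"}).success)                                # True
print(execute("craft", {"obj": "planks", "materials": {"log": 1}}).success)  # True
```

The first craft fails because no log is available yet; the failure message is exactly the kind of text signal that can be handed back to a language model.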

A language model is also used at this level to break actions down further. Given a goal (e.g., "find material and craft an iron pickaxe"), the language model first decomposes it into a tree-structured plan with a depth of at most 2.

Then the language model converts each leaf node of the plan tree into a concrete action description of the form ('verb', 'object', 'tools', 'materials').
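The two steps above can be sketched as follows: check that a tree plan stays within depth 2, then map each leaf to a ('verb', 'object', 'tools', 'materials') tuple. The plan tree, `LEAF_TO_ACTION`, and the helper names are hypothetical; in the described method a language model produces both the tree and the tuples, and a lookup table stands in for those calls here.

```python
from dataclasses import dataclass


@dataclass
class LeafAction:
    verb: str
    object: str
    tools: list
    materials: list


# Hypothetical plan tree for "find material and craft an iron pickaxe".
# Each node is (text, children); a node with no children is a leaf.
PLAN = ("craft an iron pickaxe", [
    ("get iron ingots", [("mine iron ore", []), ("smelt iron ore", [])]),
    ("get sticks", [("craft sticks", [])]),
    ("assemble the pickaxe", []),
])

# Stand-in for the LLM call that maps a leaf's text to a tuple.
LEAF_TO_ACTION = {
    "mine iron ore": LeafAction("mine", "iron_ore", ["stone_pickaxe"], []),
    "smelt iron ore": LeafAction("smelt", "iron_ingot", ["furnace"], ["iron_ore"]),
    "craft sticks": LeafAction("craft", "stick", [], ["planks"]),
    "assemble the pickaxe": LeafAction(
        "craft", "iron_pickaxe", ["crafting_table"], ["iron_ingot", "stick"]),
}


def plan_depth(node) -> int:
    """Number of levels below `node`; a leaf has depth 0."""
    _, children = node
    return 0 if not children else 1 + max(plan_depth(c) for c in children)


def leaves(node) -> list:
    """Collect leaf texts from left to right."""
    text, children = node
    if not children:
        return [text]
    return [leaf for child in children for leaf in leaves(child)]


assert plan_depth(PLAN) <= 2, "plan exceeds the depth limit of 2"
actions = [LEAF_TO_ACTION[leaf] for leaf in leaves(PLAN)]
for a in actions:
    print((a.verb, a.object, a.tools, a.materials))
```

Note that leaves can sit at different depths: "assemble the pickaxe" is a direct child of the root, while "mine iron ore" is two levels down.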

Discussion on Intelligence

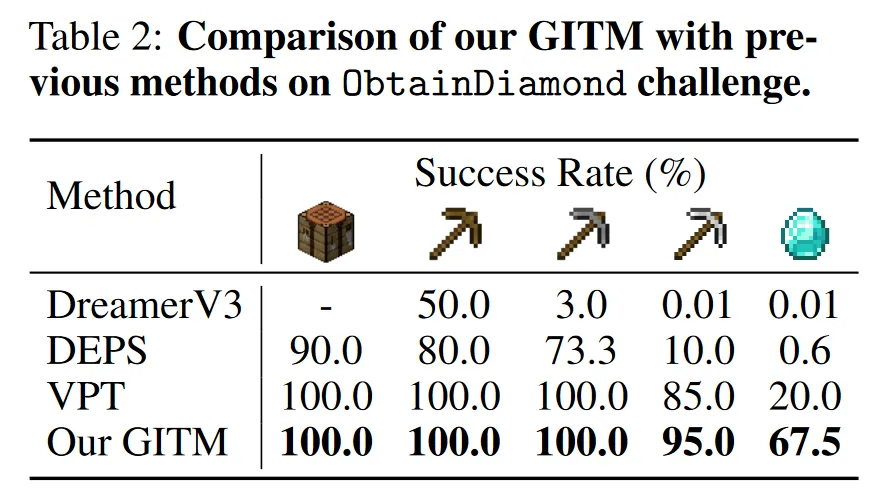

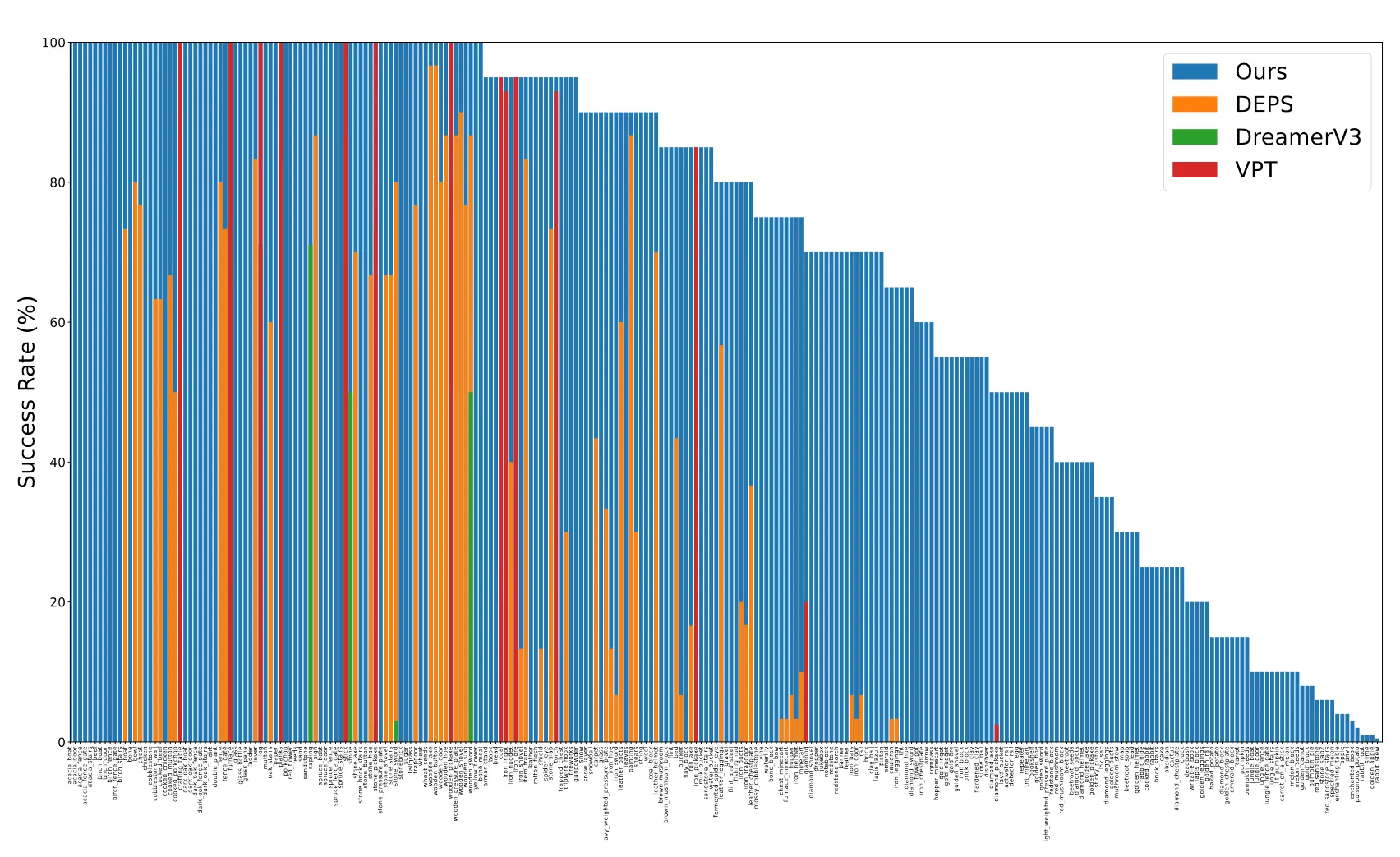

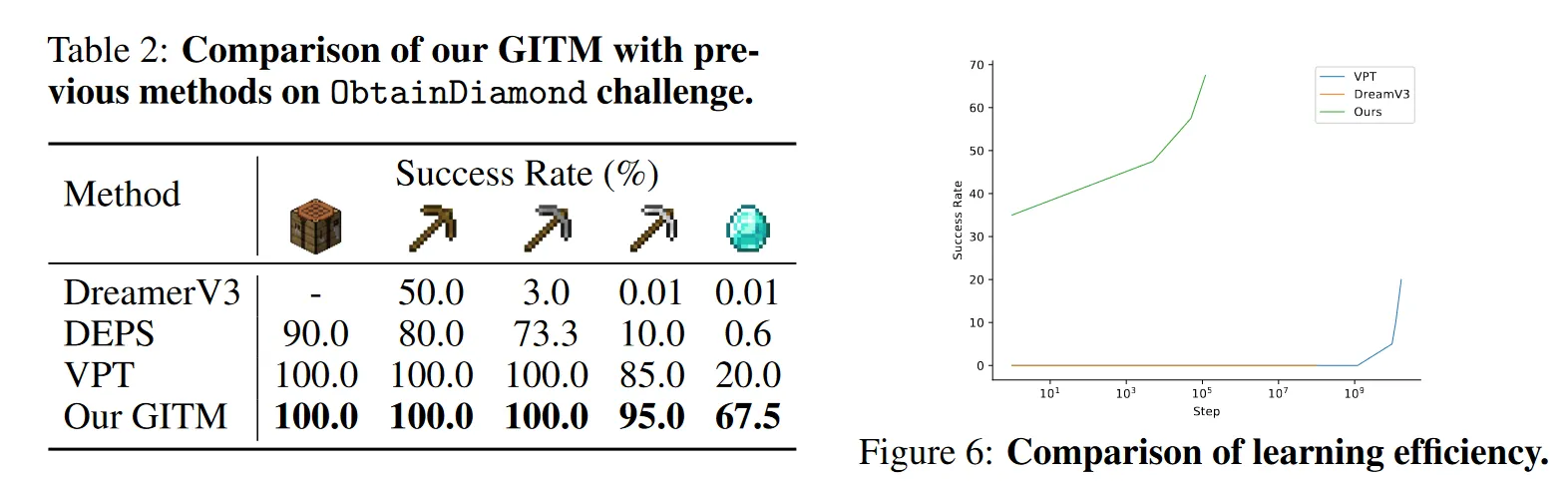

In the Minecraft environment, the method proposed in this article has achieved very good results:

Compared with the current state-of-the-art reinforcement learning algorithms, GITM dominates the Minecraft world by an overwhelming margin. Yet every step of its pipeline is so sensible that even such lopsided comparisons do not strike one as implausible. GITM's performance suggests one thing: in domains that humans have already mastered, GPT can reach a very high level of competence by "looking up the strategy guide." And since a large share of human knowledge and technology is recorded as text, the potential of this path is enormous.

Nevertheless, I still believe we are far from true intelligence. GPT is simply enormous, and the training data and compute it requires are out of proportion to its capabilities. Admittedly, ChatGPT is a big step toward AGI, but compared with true intelligence it is more like a different kind of search engine or strategy guide, and even today it cannot be relied on across a broad range of tasks. Still, it does give us a glimpse of what general artificial intelligence might look like. I hope that in our lifetime we will see true intelligence emerge.