Xjtu System Optimization And Scheduling Course Assignments

西安交通大学研究生《系统优化与调度》课程作业

Assignment 1

(1)1.1.2

(a)

\[ f(x,y)=(x^2-4)^2+y^2\\\\ \nabla f(x,y)=(4x^3-16,2y)\\\\ \nabla^2f(x,y)= \begin{pmatrix} 12x^2-16 & 0\\ 0 & 2 \end{pmatrix} \]

so there are three stationary points: (2, 0) (-2, 0) and (0, 0)

the Hesse Matrix is positive semi-definite only when \(|x|>\frac43\)

so points (2, 0) (-2, 0) are local minimum points, point (0, 0) is not a local minimum. \[ f(2,0)=f(-2,0)=0 \] so (2,0) and (-2,0) are global minimum points of f

let \(g(x,y)=-f(x,y)=-(x^2-4)^2-y^2\), it is easy to know that Hesse matrix is not positive semi-definite when \(x=0,y=0\). So the point(0,0) is not the minimum of \(g(x,y)\), which means it is also not the local maximum of \(f(x,y)\).

(b)

\[ f(x,y)=\frac12x^2+xcos\ y\\\\ \nabla f(x,y)=(x+cos\ y,-xsin\ y) \]

\(-xsin\ y=0\) only if \(x=0\) or \(y=k\pi\ (k\in Z)\)

if \(x=0\), \(x+cos\ y=0\) only if \(y=\frac\pi2+k\pi\ (k\in Z)\)

if \(y=k\pi\ (k\in Z)\), \(x+cos\ y=0\) only if \(x=-(-1)^k\)

so the stationary points of \(f(x,y)\) are \((0, \frac\pi2+k\pi)\) and \((-(-1)^k,k\pi)\) \((k\in Z)\) \[ \nabla^2f(x,y)= \begin{pmatrix} 1 & -sin\ y\\ -sin\ y & -xcos\ y \end{pmatrix} \]

\[ |(1)|>0\\ |\nabla^2f(x,y)|=-xcos\ y-sin^2\ y \]

for points \((0, \frac\pi2+k\pi)\), \[ |\nabla^2f(x,y)|=0-1=-1<0 \] for points \((-(-1)^k,k\pi)\), \[ |\nabla^2f(x,y)|=-1-0=-1<0 \] so all the stationary points are not local minimum points.

function \(f(x,y)\) have no local minima

(c)

\[ f(x,y)=sin\ x+sin\ y+sin\ (x+y)\\\\ \nabla f(x,y)=(cos\ x+cos\ (x+y),cos\ y+cos\ (x+y))\\\\ \nabla^2f(x,y)= \begin{pmatrix} -sin\ x-sin(x+y) & -sin(x+y)\\ -sin\ (x+y) & -sin\ y-sin(x+y) \end{pmatrix} \]

\[ let \left\{ \begin{array}{**lr**} cos\ x+cos\ (x+y)=0&(1)\\ cos\ y+cos\ (x+y)=0&(2) \end{array} \right. \]

(1)-(2): \(cos\ x-cos\ y=0\), so \(y=x\ or\ y=2\pi-x\)

if \(y=x\), (1) equals to \(cos\ x+cos\ 2x=0\),

using double angle formula: \(cos\ x+2cos^2\ x-1=0\)

solution: \(cos\ x=\frac12\ ,\ -1\), \(x=\frac\pi3,\pi,\frac53\pi\)

if \(y=2\pi-x\), (1) equals to \(cos\ x+1=0\)

solution: \(cos\ x=-1\), \(x=\pi\)

The stationary points are \((\frac\pi3,\frac\pi3),(\pi,\pi),(\frac53\pi,\frac53\pi)\). \[ |\nabla^2f(x,y)|=sin\ xsin\ y+(sin\ x+sin\ y)sin\ (x+y) \]

for point \((\frac\pi3,\frac\pi3)\): \[ |(-sin\ x-sin(x+y))|=-\sqrt 3\\ |\nabla^2f(x,y)|=\frac94 \] its Hesse Matrix is negative definite, so point \((\frac\pi3,\frac\pi3)\) is a local maximum point, max value is \(\frac32\sqrt3\)

for point \((\pi,\pi)\): \[ |(-sin\ x-sin(x+y))|=0\\ |\nabla^2f(x,y)|=0 \] this point is neither a local maximum nor a local minimum.

for point \((\frac53\pi,\frac53\pi)\): \[ |(-sin\ x-sin(x+y))|=\sqrt3\\ |\nabla^2f(x,y)|=-\frac34 \] this point is neither a local maximum nor a local minimum.

(d)

\[ f(x,y)=(y-x^2)^2-x^2\\\\ \nabla f(x,y)=(4x^3-4xy-2x,-2x^2+2y)\\\\ \nabla^2f(x,y)= \begin{pmatrix} 12x^2-4y-2 & -4x\\ -4x & 2 \end{pmatrix} \]

\[ let \left\{ \begin{array}{**lr**} 4x^3-4xy-2x=0\\ -2x^2+2y=0 \end{array} \right. \]

Thus the stationary point is \((0,0)\), for this point: \[ |(12x^2-4y-2)|=-2\\ |\nabla^2f(x,y)|=-4 \] its Hesse Matrix is neither positive definite nor negative definite, so this stationary point is neither a local maximum nor a local minimum.

(e)

Since the conclusion of (d)——the stationary point is neither a local maximum nor a local minimum, so the minimum point could only exist on the boundary.

Consider \(y=-1\), then \(f(x,y)=(-1-x^2)^2-x^2=x^4+x^2+1\).

In this case, the minimum point is \((0,-1)\) and the minimum is 1

Consider \(y=1\), then \(f(x,y)=(1-x^2)^2-x^2=x^4-3x^2+1\)

In this case, the minimum points are \((\frac{\sqrt6}2,1), (-\frac{\sqrt6}2,1)\), and the minimum is \(-\frac54\).

Summarizing, with the constraint \(-1\leq y\leq 1\) on \(y\), there exists global minimum and the global minimum points are \((\frac{\sqrt6}2,1), (-\frac{\sqrt6}2,1)\), the global minimum is \(-\frac54\).

(2)1.1.6

suppose \(x=(a,b),y_i=(a_i,b_i)\), then the problem could transform to: \[ min\ \sum_{i=1}^mw_i\sqrt{(a-a_i)^2+(b-b^i)^2} \] suppose \(f(a,b)=\sum_{i=1}^mw_i\sqrt{(a-a_i)^2+(b-b^i)^2}\), then: \[ \nabla f(x,y)=(\sum_{i=1}^mw_i\frac{a-a_i}{\sqrt{(a-a_i)^2+(b-b^i)^2}},\sum_{i=1}^mw_i\frac{b-b_i}{\sqrt{(a-a_i)^2+(b-b^i)^2}})\\\\ \nabla^2f(a,b)= \begin{pmatrix} \sum_{i=1}^mw_i\frac{2(b-b_i)^2}{\sqrt{((a-a_i)^2+(b-b^i)^2)^3}} & \sum_{i=1}^mw_i\frac{-2(a-a_i)(b-b_i)}{\sqrt{((a-a_i)^2+(b-b^i)^2)^3}}\\ \sum_{i=1}^mw_i\frac{-2(a-a_i)(b-b_i)}{\sqrt{((a-a_i)^2+(b-b^i)^2)^3}} & \sum_{i=1}^mw_i\frac{2(a-a_i)^2}{\sqrt{((a-a_i)^2+(b-b^i)^2)^3}} \end{pmatrix} \] let \(\nabla f(a,b)=0\), then the stationary point is \((\frac{\sum_{i=1}^mw_ia_i}{\sum_{i=1}^mwi},\frac{\sum_{i=1}^mw_ib_i}{\sum_{i=1}^mwi})\)

and \(\nabla^2 f(a,b)\) is positive definite in the point, so it is the global minimum.

(b)

there is only one stationary point in this question, so the solution is unique

(C)

let the plane points on be the reference level

suppose the length of string in weight \(i\) is \(l_i\), so the problem is: \[ min\ \sum_{i=1}^mw_i(||x-y||-l_i)=\sum_{i=1}^mw_i||x-y||-\sum_{i=1}^mw_i·l_i \] and we can see that \(\sum_{i=1}^mw_i·l_i\) is constant, so the problem could transform to: \[ \sum_{i=1}^mw_i||x-y|| \] which is same as the problem in (a).

(3) 1.2.1

(a)

\[ f(x,y)=3x^2+y^4\\\\ \nabla f(x,y)=(6x,4y^3) \]

the start point is \((1,-2)\), so the descend direction is \((-6,32)'\). Then choose \(m\)

at first, \(m=0,\alpha=s=1\) \[ f(1,2)-f(-5,30)=19-810075=-810056\leq-0.1*1*-1060=106 \]

then \(m=1,\alpha=\alpha*\beta=0.5\) \[ f(1,-2)-f(-2,14)=19-38428=-38409\leq -0.1*0.5*-1060=53 \] then \(m=2,\alpha=\alpha*\beta=0.25\) \[ f(1,-2)-f(-0.5,6)=19-1296.75=-1277.75\leq -0.1*0.25*-1060=26.5 \] then \(m=2,\alpha=\alpha*\beta=0.125\) \[ f(1,-2)-f(0.25,2)=19-16.1875\leq -0.1*0.125*-1060=13.25 \] then \(m=3,\alpha=\alpha*\beta=0.0625\) \[ f(1,-2)-f(0.625,0)=19-1.171875=17.828\geq -0.1*0.0625*-1060=6.625 \]

so the first stepsize is 0.0625, after the iteration, the point changes to \((0.625, 0)\)

(b)

when \(m=0,\alpha=s=1\), the result is above.

then \(m=1,\alpha=\alpha*\beta=0.1\) \[ f(1,-2)-f(0.4,1.2)=19-2.5536=16.4464\geq -0.1*0.1*1060=-10.6 \] so the first stepsize is 0.1, after the iteration, the point changes to \((0.4, 1.2)\)

(c)

\[ \nabla^2f(x,y)= \begin{pmatrix} 6&0\\ 0&12y^2 \end{pmatrix} \]

at point \((1,-2)\), the Hesse Matrix is: \[ \begin{pmatrix} 6&0\\ 0&48 \end{pmatrix} \] its inverse matrix is: \[ \begin{pmatrix} \frac16&0\\ 0&\frac1{48} \end{pmatrix} \] so the descend direction is: \[ d=-\begin{pmatrix} \frac16&0\\ 0&\frac1{48} \end{pmatrix}\begin{pmatrix} 6\\-32 \end{pmatrix}=\begin{pmatrix} -1\\\frac23 \end{pmatrix} \] then choose the stepsize:

at first, \(m=0,\alpha=s=1\) \[ f(1,-2)-f(0,-\frac43)=19-3.16=15.84\geq -0.1*1*1060=-106 \] so the first stepsize is 1, and the point changes to \((0,-\frac43)\)

Comparing with (a), the computing cost of Newton's method is less

(4) 1.2.6

(a)

PROOF: \[ \nabla f(x)=Qx-b'\\\\ ||\nabla f(x)-\nabla f(y)||=||Qx-Qy||\leq ||Q||·||x-y||=L·||x-y|| \] (b)

PROOF: \[ x^{k+1}=x^k-sD\nabla f(x^k)=x^k-sD(Qx^k-b')\\ x^{k+1}=(I-sDQ)x^k+sDb' \] ICBS: \[ x^{k+1}+C=A(x^k+C)\\where\ \ A=I-sDQ,B=sDb', C=(A-I)^{-1}B \] by iteration: \[ x^{k+1}+C=A(x^k+C)=A^{k+1}(x^0+C)\\ x^{k+1}=A(x^k+C)=A^{k+1}(x^0+C)-C \] then: \[ x^{k+1}=(I-sDQ)^{k+1}(x^0-Q^{-1}b')+Q^{-1}b'\\ x^{k+1}-x^*=(I-sDQ)^{k+1}(x^0-Q^{-1}b')+Q^{-1}b'-Q^{-1}b'\\ x^{k+1}-x^*=(I-sDQ)^{k+1}(x^0-Q^{-1}b')\\ \] so: \[ \lim_{k\rightarrow\infty}(x^{k+1}-x^*)=\lim_{k\rightarrow\infty}(I-sDQ)^{k+1}(x^0-Q^{-1}b') \]

\[ \forall s\in(0,2/L),\quad (I-sDQ)<1 \]

then: \[ \lim_{k\rightarrow\infty}(I-sDQ)^{k+1}(x^0-Q^{-1}b')=0\\ \lim_{k\rightarrow\infty}(x^{k+1}-x^*)=0 \] Summarizing, the method converges to \(x^*=Q^{-1}b'\) for every starting point \(x^0\) if and only if \(s\in(0,2/L)\).

(5) 1.3.1

\[ Q=\begin{pmatrix} 1&0.9995\\ 0.9995&1 \end{pmatrix} \]

\[ M=largest\ eigenvalue\ of\ Q= 1.9995\\\\ m=smallest\ eigenvalue\ of\ Q= 0.0005\\\\ \frac{f(x^{k+1})}{f(x^{k})}\leq (\frac{M-m}{M+m})^2= 0.999 \]

Let \(v_m\)and \(v_M\) be the normalized eigenvectors of Q corresponding

to m and M, respectively, and let \[ x^0=\frac smv_m\pm\frac sMv_M, s\in R \] Then after the first iteration using the line minimization stepsize

rule,(i.e., a stepsize\(\alpha=\frac2{M+m}\) ), we get \[ x^1=\frac{M-m}{M+m}(\frac smv_m\mp\frac sMv_M)=\frac{M-m}{M+m}x^0 \] Therefore, starting the iterations with\(x^0\) , we have for all k \[ \frac{f(x^{k+1})}{f(x^{k})}= (\frac{M-m}{M+m})^2 \]

(6) 1.3.4

\[ f(x)=\frac12(x-x^*)'Q(x-x^*)\\\\ \nabla f(x)=Q(x-x^*) \]

then: \[ x^{k+1}=x^k-s(Q(x^k-x^*)+e^k)\\ x^{k+1}=x^k(I-sQ)+sQx^*+se^k\\ x^{k+1}-x^*=(x^k-x^*)(I-sQ)+se^k \] since for any \(A B, ||A·B||\leq||A||·||B||\) \[ ||x^{k+1}-x^*||\leq ||(x^k-x^*)(I-sQ)||+s||e^k|| \] then: \[ ||x^{k+1}-x^*||\leq ||x^k-x^*||·q+s\delta \] by the iteration, \[ ||x^k-x^0||\leq q^k||x0-x^k||+s\delta(1+q+q^2+...+q^{k-1}) \] so: \[ ||x^k-x^0||\leq q^k||x0-x^k||+\frac{s\delta}{1-q} \] i.e. \[ ||x^k-x^0||\leq \frac{s\delta}{1-q}+q^k||x0-x^k|| \]

(7) 1.4.1

In Newton's Method, we have: \[ x^{k+1}=x^k-\alpha^k(\nabla^2f(x^k))^{-1}\nabla f(x^k) \] consider \(x=Sy\), then: \[ Sy^{k+1}=Sy^k-S^{-2}\alpha^k(\nabla^2f(Sy^k))^{-1}S\nabla f(Sy^k)\\\\ y^{k+1}=y^k-S^{-1}\alpha^k(\nabla^2f(Sy^k))^{-1}\nabla f(Sy^k) \] since \(y=S^{-1}x\), consider \(y^0=S^{-1}x^0\), then: \[ y^{1}=y^0-S^{-1}\alpha^0(\nabla^2f(Sy^0))^{-1}\nabla f(Sy^0)\\ y^{1}=S^{-1}x^0-S^{-1}\alpha^0(\nabla^2f(x^0))^{-1}\nabla f(x^0) \] so \(y^1=S^{-1}x^1\), for \(y^k=S^{-1}x^k\): \[ y^{k+1}=y^k-S^{-1}\alpha^k(\nabla^2f(Sy^k))^{-1}\nabla f(Sy^k)\\ y^{k+1}=S^{-1}x^k-S^{-1}\alpha^k(\nabla^2f(Sy^k))^{-1}\nabla f(Sy^k)\\ y^{k+1}=S^{-1}x^{k+1} \] summarizing, the sequence made by Newton's Method \(y^k=S^{-1}x^k\).

## (8)

PROOF:

Since \(f(x)\) is convex, \[ \forall x_1,x_2\in \Omega,\lambda\in(0,1)\quad \lambda f(x_1)+(1-\lambda )f(x_2)\geq f(\lambda x_1+(1-\lambda )x_2) \] Since \(\gamma(x)\) is a monotone nondecreasing function, \[ \gamma(\lambda f(x_1)+(1-\lambda )f(x_2))\geq \gamma (f(\lambda x_1+(1-\lambda )x_2)) \] Since \(\gamma(x)\) is convex, \[ \lambda\gamma( f(x_1))+(1-\lambda )\gamma(f(x_2)) \geq \gamma(\lambda f(x_1)+(1-\lambda )f(x_2)) \] Therefore, \[ \lambda\gamma( f(x_1))+(1-\lambda )\gamma(f(x_2)) \geq \gamma (f(\lambda x_1+(1-\lambda )x_2)) \] This implies that \(\gamma(f)(x)\) is convex.

(9)

(a)

\[ f(x,y)=5x^2+5y^2-xy-11x+11y+11\\\\ \nabla f(x,y)=(10x-y-11,10y-x+11) \]

\[ let\left\{ \begin{array}{**lr**} 10x-y-11=0\\ 10y-x+11=0 \end{array} \right. \]

the solution of the equation set above is \((1,-1)\),

it is the point satisfying the first-order necessary conditions

(b)

\[ \nabla^2 f(x,y)= \begin{pmatrix} 10&-1\\ -1&10 \end{pmatrix} \]

it is easy to know the Hessian Matrix is always positive definite, so the point\((1,-1)\) is a global minimum point, and the global minimum is 0

(c)

for the steepest decent method, \(D^k=I\) \[ (D^k)^{\frac12}\nabla^2f(x,y)(D^k)^{\frac12}=\nabla^2f(x,y)=\begin{pmatrix} 10&-1\\ -1&10 \end{pmatrix} \]

\[ M=11,\quad m=9\\ \frac{f(x^{k+1})-f(x^*)}{f(x^{k})-f(x^*)}\leq(\frac{M-m}{M+m})^2=0.01 \]

(10)

(a)

\[ f(x,y)=x^2+y^2+xy-3x\\\\ \nabla f(x,y)=(2x+y-3,x+2y)\\\\ \nabla^2f(x,y)= \begin{pmatrix} 2&1\\ 1&2 \end{pmatrix} \]

\[ let\left\{ \begin{array}{**lr**} 2x+y-3=0\\ x+2y=0 \end{array} \right. \]

the solution of the equation set above is \((2,-1)\), and the Hessian Matrix is always positive definite,

so point \((2,-1)\) is unconstrained local minimum point.

(b)

Because Hessian Matrix is always positive definite, the function \(f(x,y)\) is convex for all point in the real space.

(c)

the minimum points must on the boundary of the constraint.

consider \(x=0\), then \(f(x,y)=y^2\), the minimum point is \((0,0)\) and the minimum is \(0\)

consider \(y=0\), then \(f(x,y)=x^2-3x\), the minimum point is \((\frac32,0)\) and the minimum is \(-\frac94\)

therefore, the minimum point of f subject to \(x\geq 0,y\geq 0\) is \((\frac32,0)\) and the minimum is \(-\frac94\)

(d)

for the steepest decent method, \(D^k=I\) \[ (D^k)^{\frac12}\nabla^2f(x,y)(D^k)^{\frac12}=\nabla^2f(x,y)=\begin{pmatrix} 2&1\\ 1&2 \end{pmatrix} \]

\[ M=3,\quad m=1\\ \frac{f(x^{k+1})-f(x^*)}{f(x^{k})-f(x^*)}\leq(\frac{M-m}{M+m})^2=\frac14 \]

Assignment 2

(1)

Q: Consider the quadratic program \[ min\ 0.5x^TQx-b^Tx\\ s.t.\ Ax=c \] Prove that \(x^*\) is a local minimum point if and only if it is a global minimum point.(No convexity is assumed)

Proof:

Since no convexity is assumed, we consider two cases:

If Q is a positive definitive matrix. It’s obvious that \(x^*\) is a local minimum point if and only if it is a global minimum point.

If Q is not a positive definitive matrix.

Assume \(x^*\) is a local minimum point, set \(x^*+d\) as the global minimum point.

so \(A(x^*+d)=c\)

and because \(A(x^*)=c\)

so \(Ad=0\)

so we have \(A(x*+2d)=c\)

then according to the optimality condition, we have \[ \nabla f(x^*+d)'((x^*+2d)-(x^*+d))\geq0\\ (Q(x^*+d)-b')d\geq0 \] and we have \[ \begin{align} f(x^*+d)&=0.5(x^*+d)'Q(x^*+d)-b'(x^*+d)\\ &=0.5x^{*'}Qx^*-bx^*+(Q(x^*+d)-b')d-0.5d'Qd\\ &=f(x^*)+(Q(x^*+d)-b')d-0.5d'Qd\\ \end{align} \] as \((Q(x^*+d)-b')d\geq0\) and \(-0.5d'Qd\geq0\)

then \(f(x^*+d)\geq f(x^*)\)

and because \(f(x^*+d)\) is the global minimum, so we can get that \(f(x^*+d)=f(x^*)\). and then we can conclude that the local minimum is the global minimum.

(2)

Suppose that the projected negative nablaient d is calculated satisfying \[ -g=d+A_q^T\lambda \] and that some component \(\lambda_i\) of \(\lambda\), corresponding to an inequality, is negative. Show that if the \(i\)th inequality is dropped, the projection \(d_i\) of the negative nablaient onto the remaining constraints is a feasible direction of decent

Proof:

Let the new direction vector \(d_i\) by relaxing the \(i\)th constraint and projecting the negative nablaient onto the subspace determined by remaining \(q-1\) active constraints.

Let \(A_\bar q\) denote the matrix \(A_q\) with row \(a_j\) deleted.

We know that \(g^Td_i<0\) .

Multiplying the equation \(-g=d_i+A^T_\bar q\lambda_i\) by \(d_i\) and using \(A_\bar q d_i=0\) we obtain: \[ 0>g^Td_i=-\lambda_ia_i^Td_i \] Since \(\lambda_i<0\) , we conclude that \(d_i^Td_i<0\) .

So the vector \(d_i\) is a direction of descent, but it is a feasible direction.

(3) 3.1.9

(a)

\[ min\ x+y+z\\ s.t. xyz=\rho^2(x+y+z) \]

consider the Lagrangian Relaxation: \[ L(x,y,z,\lambda)=x+y+z+\lambda(xyz-\rho^2(x+y+z)) \] let \(\nabla L=0\), then we have \[ \left\{ \begin{array}{**lr**} 1+\lambda(yz-\rho^2)=0\\ 1+\lambda(xz-\rho^2)=0\\ 1+\lambda(xy-\rho^2)=0\\ xyz=\rho^2(x+y+z) \end{array} \right. \] then we have: \[ \frac{y+z}x=\frac{x+z}y=\frac{x+y}z \] so \(x=y=z\)

(b)

consider all lines parallel to the line that passes through \(a\) and \(b\) and

intersect the circle.

the maximal area appears only if the point \(x\) is the point of tangency between the parallel line and the circle.

and we can see that the line through \(x\) and \(\hat x\) is also the line perpendicular to the tangent line and through point \(x\).

so this line must passes through the center of the circle.

(c)

Fix two vertices and the corresponding side of the corresponding side of the triangle and optimize vertex and the vertex of the circle should be orthogonal to the fixed side. Hence, the optimal is obtained when the two remaining side are equal. Repeating this argument, fixing each side of the triangle in turn, we see that all sides should be equal.

(d)

Similar line of argument as in (c).

(4) 3.3.5

(a)

Let \(d\in \bar F(x^*)\), so \(\exists \{d^k\}\subset F(x^*)\), so that \(d^k\rightarrow d\).

And we have: \[ \nabla f(x^*)'d^k=lim_{\alpha\rightarrow0}\frac{f(x^*+\alpha d^k)-f(x^*)}\alpha \]

Because \(x^*\) is a constrained local minimum, so\(\frac{f(x^*+\alpha d^k)-f(x^*)}\alpha\geq0\) .

So, \(\nabla f(x^*)'d^k\geq0\).

(b)

If x* is a constrained local minimum, from (a) we can get \[ \nabla f(x^*)'d^k\geq0,\ \forall d\ with\ \nabla g_j(x^*)'d\leq0,\ \forall j\in A(x^*) \] According to Farkas’Lemma, it is true iff there exists \(u^*\) such that \[ -\nabla f(x^*)=\sum_{j\in A(x^*)}u_j\nabla g_j(x^*),\ u_j^*\geq0 \] Setting \(u_j^*=0\) for \(j\notin A(x^*)\) .

we have the desired result.

(c)

We want to show that \(\bar F(x^*)=V(x)\), where \(V(x^*)\) is the cone of first order feasible variations given by \(V(x^*)=\{d|\nabla g_j(x^*)'d\leq0,\ \forall j\in A(x^*\}\).

From Mean Value Theorem, for each \(j \in A(x^*)\) and for any \(d\in F(x^*)\) there exist \(\varepsilon\in[0,1]\) such that \(g_j(x^*+\alpha d)=g_j(x^*)+\alpha\nabla g_j(x^*+\varepsilon\alpha d)'d\).

Because \(g_j(x^*+\alpha d)\leq0\), so \[ \lim_{\alpha\rightarrow0}\alpha\nabla g_j(x^*+\varepsilon\alpha d)'d\leq0 \] And that implies that $g_j(x^)d,jA(x^ $, so that \(d\in V(x^*)\). Therefore \(F(x^*)\subset V(x)\), since \(V(x^*)\) is closed, so \(\bar F(x^*)\subset V(x)\).

From each of 1-4, we can also prove that \(\bar F(x^*)\supset V(x)\).

So we can get that $ F(x^*)=V(x)$.

(d)

We can easily get the local minimum is \(x^*= (0,0)\). So we have \[ \nabla g_1(0,0)=(0,1)^T\\ \nabla g_2(0,0)=(0,-1)^T \] Obviously g1 and g2 are not linear, so the condition(1) is violated. Furthermore, there is no vector \(d= (d1,d2)’\) such that \[ \nabla g_1(0,0)'d=d_2<0,\ \nabla g_1(0,0)'d=-d_2<0 \]

So the condition2 is violated. Similarly, condition3 is violated. The vectors \(\nabla g_1(0,0)\) and \(\nabla g_2(0,0)\) are linearly dependent since \(\nabla g_1(0,0)=-\nabla g_2(0,0)\), so the condition4 is violated.

Assume \(u_0\nabla f(x^*)+u_1\nabla g_1(x^*)+u_2\nabla g_2(x^*)=0\), so we have: \[ (u_0,u_0)'+(0,u_1)'+(0,u_2)'=(0,0)' \] It follows that \(u_0=0\). So there is no Lagrange multiplier.

(e)

From the description we know that \(x^*\) is also a local minimum for the modified problem.

The modified problem has a single constraint \(g_1(x)=||h(x)||^2\), which is active at \(x^*\).

Since \(g_1\) is not linear, the condition 1 is violated.

Because \(\nabla g_1(x^*)=2\nabla h(x^*)\nabla h(x^*)=0\), the conditions 2 and 4 are violated. If condition 3 holds, we can prove that condition 2 is hold too, so condition 3 is violated, too. From \(u_0^*\nabla g(x^*=-u_1^*\nabla g_1(x^*))\) and \(\nabla g_1(x^*)=0\), it follows that \(u_0^*=0\), so there is no Lagrange multiplier.

(5) 5.1.7

(a)

\[ let \ x_i=\left\{ \begin{array}{**lr**} 0 & if\ the\ object\ is\ included\ in\ the\ subset\\ 1 & otherwise \end{array} \right. \]

Then the total weight and value of objects included is \(\sum_iw_ix_i\) and iv_ix_i, respectively, and the problem can be written as $$ max f(x)={i=1}^nv_ix_i\

- t._{i=1}^nw_ix_iA, xi ∈ {0, 1}, i= 1, . . . , n. $$

(b)

Let \(\hat f(x)=-f(x)\) and consider the equivalent problem of minimizing \(\hat f(x)\) subject to the constraints given above. Then \[ L(x,\mu)=-\sum_{i=1}^nv_ix_i+\mu(\sum_{i=1}^nw_ix_i-A) \] and \[ \hat q(u)=\inf_{x_i\in\{0,1\}}\{\sum_{i=1}^n(\mu w_i-v_i)x_i-\mu A\} \] Note that the minimization above is a separable problem, and the minimum is attained at \[ \hat x_i(\mu)= \left\{ \begin{array}{**lr**} 0& if\ \mu<v_i/w_i\\ 1& if\ \mu>v_i/w_i\\ o\ or\ 1 & otherwise \end{array} \right. \] Without loss of generality, assume that the objects are ordered such that: \[ \frac{v_1}{w_1}\leq\frac{v_2}{w_2}\leq...\leq\frac{v_n}{w_n} \] When\(\mu\in(\frac{v_{j-1}}{w_{j-1}},\frac{v_j}{w_j}]\) for some \(j\) with \(1≤j ≤n\), then \(\hat x_i=1\) for all \(i≥j\).

And $ x_i() = 0$ otherwise, and \[ \hat q(\mu)=u(\sum_{i=1}^nw_i-A)-\sum_{i=1}^nv_i \] From this relation, we see that, as μ increases, the slope of \(\hat q(u)\) decreases from \(\sum_{i=1}^nw_i-A\) to \(−A\). Therefore, if $ _{i=1}^nw_i-A> 0$, \(\hat q(u)\) is maximized when the slope of the curve goes from positive to negative. In this case, the dual optimal value \(\hat q^*\) is attained at \(u^*=\frac{v_i^*}{w_i^*}\), where\(i^*\)is the largest \(i\) such that \[ w_1+w_2+...+w_n\geq A \]

If \(\sum_{i=1}^nw_i-A ≤ 0\), then the dual optimal value \(\hat q^*\)is attained at \(u^* = 0\).

(c)

Consider a relaxed version of the problem of minimizing \(\hat f\): $$ max f_R(x)=-_{i=1}^nv_ix_i\

- t._{i=1}^nw_ix_iA, xi ∈ [0, 1], i= 1, . . . , n. $$ Let \(f_R^*\) and \(q_R^*\) be the optimal values of the relaxed problem and its dual, respectively.

In the relaxed problem, the cost function is convex over \(R^n\)(in fact it is linear), and the constraint set is polyhedral.

Thus, according to Prop. 5.2.1, there is no duality gap, and \(f_R^*=q_R^*\) . The dual function of the relaxed problem is: \[ q_R(\mu)=\inf_{x_i\in\{0,1\}}\{\sum_{i=1}^n(\mu w_i-v_i)x_i-\mu A\} \]

Again, \(q_R(x)\)is separable and the minimum is attained at \[ \hat x_i(\mu)= \left\{ \begin{array}{**lr**} 0& if\ \mu<v_i/w_i\\ 1& if\ \mu>v_i/w_i\\ o\ or\ 1 & otherwise \end{array} \right. \] Thus the solution is the same as the \(\{0, 1\}\) constrained problem for all \(i\) with \(\mu\neq\frac{v_i}{w_i}\). For \(i\) with\(\mu=\frac{v_i}{w_i}\), the value of \(x_i\) is irrelevant to the dual function value. Therefore, \(\hat q(u)=q_R(\mu)\) for all μ, and thus \(\hat q^*=q_R^*\).

Following Example 5.1.2, it can be seen that the optimal primal and dual solution pair \((x^∗, μ^∗)\) of the relaxed problem satisfies $$ μ∗ w_i = v_i , if 0 <x_i^*< 1,\

μ∗ w_i v_i , if x_i^*=0,\

μ∗ w_i v_i , if x_i^*= 1, $$

In fact, it is straightforward to show that there is an optimal solution of the relaxed problem such that at most one \(x^*_i\) satisfies \(0 < x_i^* < 1\). Consider a solution equivalent to this optimal solution with the exception that = 0 if 0 < < 1. This solution is clearly feasible for the {0, 1} constrained problem, so that we have \[ \hat f^*\leq \hat f(\hat x)\leq f_R^*+\max_{i\leq i\leq n}v_i \] Combining with earlier results, \[ \hat f^*\leq q_R^*+\max_{i\leq i\leq n}v_i=\hat q^*+\max_{i\leq i\leq n}v_i \] Since \(\hat f^*=-f\) and \(\hat q^*=-q^*\) we have the desired result.

(d)

Since the object weights and values remain the same we have from (c) that \(0\leq q^*(k)-f^*(k)\leq \max_{i\leq i\leq n}v_i\). By including in the subset each replica of an object assembled in the optimal solution to the original problem, we see that \(f^*(k)\geq kf^*\) . It then follows that \[ \lim_{k\rightarrow\infty}(q^*(k)-f^*(k))=0 \]

Assignment 3

5.5.2

\[ min\ \ f(x)=10x_1+3x_2\\ s.t.\ \ 5x_1+x_2\geq4\ \ x_1,x_2\in\{0,1\} \]

Using Lagrangian relaxiation:

the Lagrangian funcion is \[ L(x,\mu)=10x_1+3x_2+\mu(4-5x_1-x_2)=(10-5\mu)x_1+(3-\mu)x_2+4\mu \] the dual problem is: \[ max\ \ q(\mu)=\min_{x\in\{0,1\}}L(x,\mu)\\ s.t.\ \ \mu\geq0 \] the solution is \(\mu=2, q^*=8\)

Using constraint relaxation:

the releaxed problem is: \[ min\ \ f(x)=10x_1+3x_2\\ s.t.\ \ 5x_1+x_2\geq4\ \ x_1,x_2\in[0,1] \] (a) when \(x_1=0\), the prolem is: \[ min\ \ f(x)=3x_2\\ s.t.\ \ x_2\geq4\ \ x_2\in[0,1] \] this problem is infeasible.

- when \(x_1=1\), the problem is: \[ min\ \ f(x)=10+3x_2\\ s.t.\ \ 5+x_2\geq4\ \ x_2\in [0,1] \] when \(x_2=0\), the value of object function is minimization. so the solution is \((1,0)\), it is also integer solution, so the point \((1,0)\) is the optimal point of the primal problem, minimization is 10

so the lower bound of Lagrangian relaxiation is less than constraint relaxation.

5.5.10

\[ \begin{align*} \bar q&=\inf_{x\in \bar X}\{f(x)+\sum_{j=0}^{\bar r}\mu_jg_j(x)\}\\ &=\inf_{\ \ \ \ \ \ \ \ \ \ x\in X\\g_{\bar r+1}(x)\leq0...g_r(x)\leq0}\{f(x)+\sum_{j=1}^{\bar r}\mu_jg_j(x)\}\\ &\geq \inf_{\ \ \ \ \ \ \ \ \ \ x\in X\\g_{\bar r+1}(x)\leq0...g_r(x)\leq0}\{f(x)+\sum_{j=1}^{r}\mu_jg_j(x)\}\\ &\geq \inf_{x\in X}\{f(x)+\sum_{j=1}^{r}\mu_jg_j(x)\}\\ &=q \end{align*} \] so we have \(\bar q^*\geq q^*\), and we have \(\bar q^*\leq f^*\) by the weak duality theorem, so \(q^*\leq\bar q^*\leq f^*\)

if \(q^*=f^*\), and we have\(q^*\leq\bar q^*\leq f^*\)

so we can get \(q^*=\bar q^*=f^*\)

- \[ min\ \ f(x)=e^{-x_1x_2}\\ s.t.\ \ x_1=0,\ x_1-1\leq0,\ x\in X=\{x|x\geq0\} \]

\[ \bar X=\{x|x\geq0, x_1=0\} \]

and we can get \(q^*=0,\bar q^*=f^*=1\), so \(q^*<\bar q^*=f^*\)

6.3.1

Let \(\mu^∗\) be a dual optimal solution, we obtain: \[ ||\mu^{k+1}-\mu^*||^2\leq ||\mu^{k}-\mu^*||^2-2s^k(q^*-q(u^k))+(s^k)^2||g^k||^2 \] where \(q^*=q(\mu^*)\), \(s^k=\frac{q^*-q(\mu^k)}{||g^k||^2}\), and we have: \[ ||\mu^{k+1}-\mu^*||^2\leq ||\mu^{k}-\mu^*||^2-\frac{q^*-q(\mu^k)}{||g^k||^2} \] So \[ ||\mu^{k+1}-\mu^*||^2\leq ||\mu^{k}-\mu^*||^2,\ \ \forall k \] implying that \(\{\mu^k\}\) is bounded.

Let \(C\) be a positive constant such that \(||g^k|| ≤ C\) for all \(k\), then \[ ||\mu^{k+1}-\mu^*||^2+\frac{q^*-q(\mu^k)}{C^2}\leq ||\mu^{k}-\mu^*||^2 \] By summing these inequalities over all \(k\), we obtain \[ \frac1{C^2}\sum_{k=0}^\infty(q^*-q(\mu^k))^2\leq||\mu^0-\mu^*||^2 \] so \(\lim_{k\rightarrow \infty}q(\mu^k)=q^*\)

Since \({\mu^k}\) is bounded, there exist a vector \(\hat \mu\) and a subsequence ${^k}_{kK} {^k} $converging to \(\hat\mu ∈ M\). By using the upper-semicontinuity of q, we have \[ \lim_{k\rightarrow\infty}\sup_{k\in\mathcal K}q(\mu^k)\leq q(\hat \mu)\leq q^* \] Now we show that \(\{\mu^k\}\) actually converges. Let \(M^∗\) denote the set of all dual optimal solutions. Note that \(M^∗\) is convex (by concavity of \(q\)) and closed (by upper-semicontinuity of \(q\)). Suppose that \(\{\mu^k\}\) has two distinct limit points, say \(\hat\mu \in M^∗\) and \(\widetilde\mu \in M^∗\). As seen in (a), for any \(\mu^* \in M^∗\), the sequence \(\{||\mu^k − \mu^∗||\}\) decreases monotonically, and therefore it converges. Hence \(||\hat\mu − \mu^∗|| = ||\widetilde\mu − \mu^∗||\) for all \(\mu^* \in M^∗\), implying that \(\hat \mu=\widetilde \mu\).

Let \(q\) be real-valued and concave over the entire space \(\mathbb{R}^r\). According to Prop. B.24 of Appendix B, since \(\{\mu^k\}\) is bounded, the set \(∪_{k≥0}∂q(\mu^k)\) is bounded, and so is \(\{g^k\}\).

6.3.3

Suppose that \(\lim_{k\rightarrow\infty}\inf\sqrt{k}(q^*-q(\mu^k))>0\), Then there is an \(\epsilon>0\) and large enough \(\bar k\) such that \(\sqrt{k}(q^*-q(\mu^k))\geq\epsilon\) for all \(k\geq \bar k\), so \[ (q^*-q(\mu^k))^2\geq\frac{\epsilon^2}{k},\ \ \ \ \forall k\geq \bar k \] implying that \[ \sum_{k=\bar k}^\infty(q^*-q(\mu^k))^2\geq \epsilon^2\sum_{k=\bar k}^\infty\frac1k=\infty \] which contradicts the relation \[ \sum_{k=0}^\infty(q^*-q(\mu^k))^2<\infty \] (b)

As seen in 6.3.1, we have for all dual optimal solutions \(\mu^*\) and all \(k\) \[ ||\mu^{k+1}-\mu^*||^2\leq ||\mu^{k}-\mu^*||^2-\frac{q^*-q(\mu^k)}{||g^k||^2} \] This relation and the inequality \(q(\mu^∗) − q(\mu^k) ≥ a||\mu^∗ − \mu^k||\) yield for all \(k\) \[ ||\mu^{k+1}-\mu^*||^2\leq ||\mu^{k}-\mu^*||^2-\frac{a^2||\mu^k-\mu^*||^2}{||g^k||^2} \] from which, by using \(\sup_{k≥0} ||g^k||≤ b\), we obtain \[ ||\mu^{k+1}-\mu^*||^2\leq (1-\frac{a^2}{b^2})||\mu^{k}-\mu^*||^2 \] and the desired relation follows.

Assignment 4

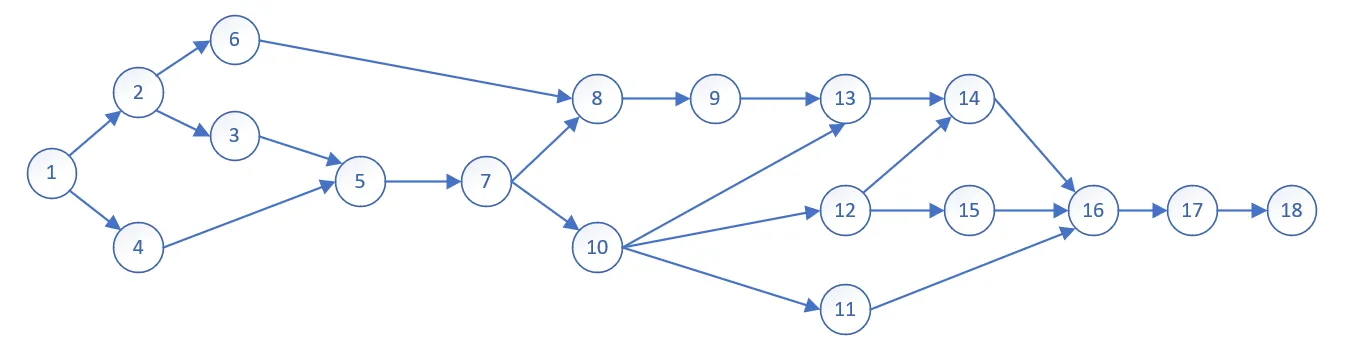

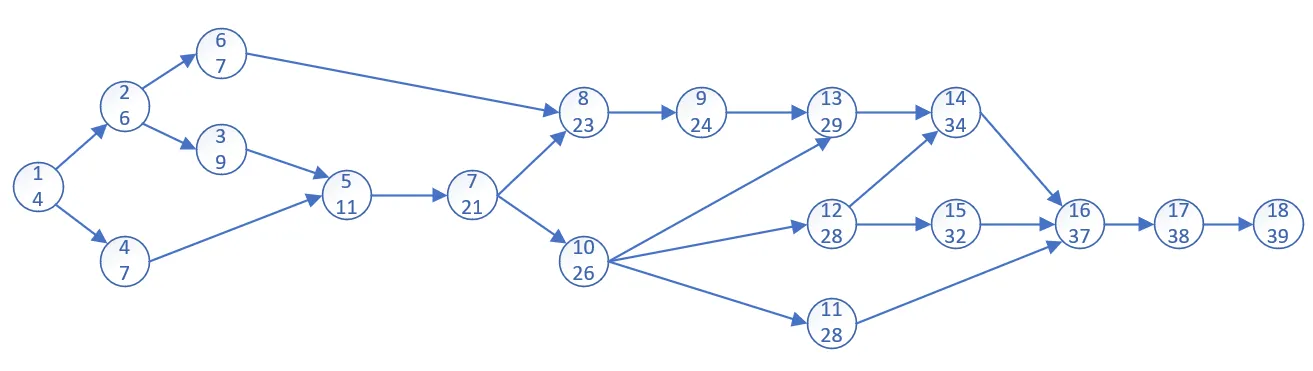

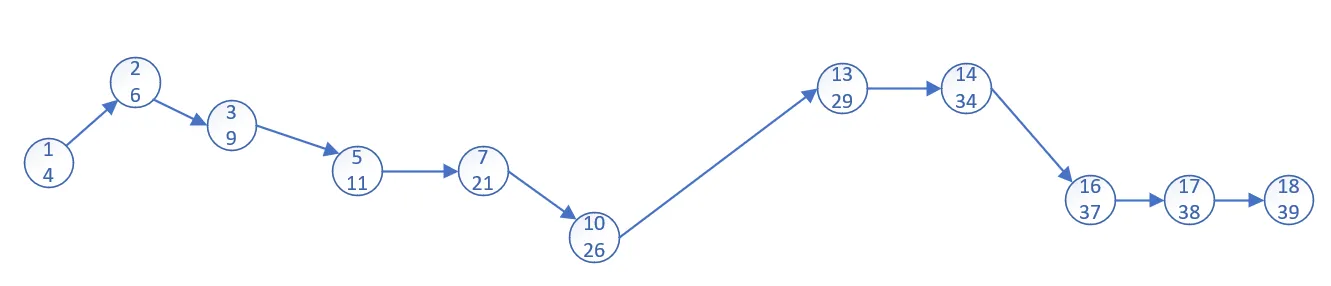

2.1

(a)

(b)

We cloud use dynamic program to solve this question, the computational process is following:

and the makespan of this project is 39 weeks

(c)

This is the most cost trace of the project, shorting each of them could save a week time.

job 7 (Walls) should be shorten most since it cost 10 weeks and all the following work must wait for its finishing.

2.4

(a)

The processor sharing is a service discipline where a number of jobs are processed simultaneously, it can be seen that the processing could be interrupt any time. For CPU, the processing already done on the preempted job is not lost, so the model of the processor sharing concept on CPU is Preemptive-Resume form.

(b)

Assume that the switch of job takes no time, there are \(n\) jobs need to be processed, each job has a weight \(w_j\) and process time \(c_j\). Function \(g(j,t)\) shows if the job \(j\) has been finished on time \(t\), if finished, \(g(j,t)=0\); else \(g(j,t)=1\). Function \(f(j,t)=1\) shows that job \(j\) are processed on time t, \(f(j,t)=0\) shows that job \(j\) are not processed on time t

We would like the cpu process the job with high weight as quickly as possible, so we get the following objective optimization problem: \[ min\ \ \sum_{j=1}^n\int_0^{+\infty}g(j,t)w_j\\ s.t.\ \ \sum_{j=1}^nf(j,t)\leq 1\\ \ \ \ \ g(j,t)=\left\{ \begin{array}{**lr**} 1 & if \int_0^tf(j,\tau)d\tau\geq c_j\\ 0 & otherwise \end{array} \right.\\ \int_0^{+\infty}f(j,\tau)d\tau=c_j \]

2.9

: